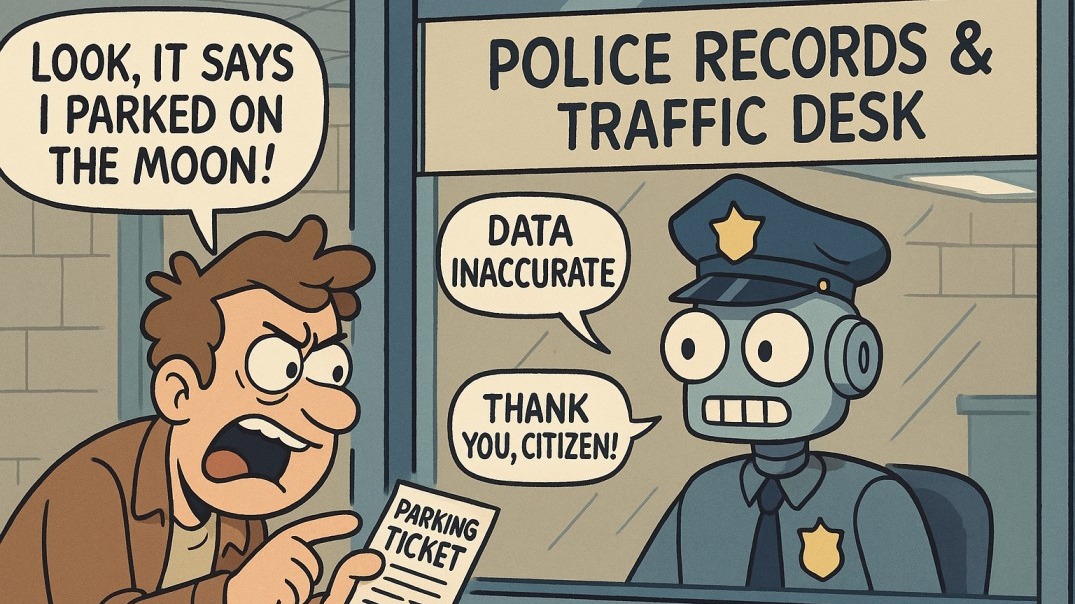

Understanding Artificial Incompetence and Its Risks

Artificial incompetence refers to AI systems that fail to deliver useful insights or solutions, potentially endangering community safety and the efficiency of public services. Law enforcement and public safety professionals must recognize the implications of inadequate or inefficient AI tools and how their failures affect community trust and perception.

Why Community Trust Matters in Law Enforcement

Community trust serves as the backbone of effective policing. When technology fails, the erosion of this trust can be swift and damaging. Reports of algorithmic biases or communication failures can lead to skepticism among citizens concerning law enforcement's ability to keep them safe and serve them effectively. Upholding public trust requires transparency and accountability in police operations, particularly as technology increasingly mediates public interactions with law enforcement.

Importance of Ethical AI Integration

Integrating AI into law enforcement should be about more than efficiency; it must also account for ethical implications and outcomes. The development and deployment of AI tools must include careful monitoring to ensure that community safety remains a priority. This ethical approach should involve collaboration with community stakeholders and experts to create AI systems that support fair policing and public safety innovation.

Data Analysis and Predictive Policing: Balancing Benefits and Risks

Data analysis and predictive policing offer valuable potential for crime prevention. However, poorly designed AI tools can lead to misinformed decisions, exacerbating tensions within communities. Policymakers and law enforcement agencies must tread carefully, balancing technological adoption with robust accountability measures. Investing in officer training on the ethical use of AI and data collection can promote responsible application and better outcomes in crime reduction.

Best Practices for Enhancing Police Training Techniques

Effective police training programs must adapt to the evolving tech landscape. Integrating skills such as effective communication, emotional intelligence, and crisis management into training curricula can empower officers to better navigate interactions with the community. Moreover, encouraging the use of AI tools in training can help build a more knowledgeable and resourceful workforce.

A Vision for the Future: Smart Policing and Enhanced Public Safety

Looking ahead, the goal must be to harness technology in a manner that builds community engagement and fosters public safety. As cities increasingly adopt smart policing techniques, law enforcement agencies should ensure that residents are part of the conversation, shaping the policies and strategies that will ultimately affect their lives. Effective emergency response, transparency, and real-time monitoring can create an environment where citizens feel safe and valued, which is a crucial component of resilience and trust between communities and their police.

In conclusion, artificial incompetence reflects deeper risks for law enforcement and community safety. For stakeholders in the public sector, it is imperative to address these challenges through innovative training, transparent communication, and ethical AI integration to build a future where public safety and community engagement thrive.

If you're committed to enhancing community safety and public trust, now is the time to evaluate how effective your current technology solutions are. Explore best practices, integrate ethical AI, and foster a culture of accountability in your operations.
